In December 2011 Google announced they were adding smartphone crawling to their mobile crawler Googlebot-Mobile, which at the time handled feature phone crawling. The key sentence from the announcement above was:

The content crawled by smartphone Googlebot-Mobile will be used primarily to improve the user experience on mobile search. For example, the new crawler may discover content specifically optimized to be browsed on smartphones as well as smartphone-specific redirects.

This new functionality from Google produced some very interesting behaviour in the mobile search results. In March 2012, I provided the research that helped Cindy Krum put together a piece for Search Engine Land on the impacts of the new smartphone crawler from Google.

Fast forward to January 2014 and Google announced another substantial change to how they were going to handle smartphone crawling moving forward. To simplify configuration for webmasters and in response to the prolific growth in smartphone usage, Google moved smartphone crawling from Googlebot-Mobile into Googlebot.

Google subsequently published new recommendations and guidelines for making a website mobile friendly. After reading through this documentation, there were still quite a lot of questions unanswered and it wasn’t clear if anything about Google’s position in 2011 had changed now that smartphone crawling had a new home within Googlebot.

Current State Of Play

Since June 2012, responsive web design has been Google’s recommended approach; however, they also support mobile-specific websites and dynamic serving. Websites that use responsive web design are helpful for Google, as they crawl the site once with Googlebot and get all the information needed. It gets more complicated for website owners and Google when mobile-specific websites or dynamic serving are involved, and this is where Googlebot (smartphone) plays a role in helping Google understand what user experience a website delivers.

The role of Googlebot-Mobile when it was crawling with a smartphone user agent, or now Googlebot (smartphone), is quite well understood for mobile-specific websites. The smartphone crawler from Google will detect user-agent-based redirects, faulty redirects, mobile app download interstitials and a variety of other elements. Google uses this information to optimise the search experience for users by linking directly to mobile content and avoiding redirects where possible, correctly returning mobile optimised URLs thanks to rel="alternate" tags and so forth.

What isn’t that well understood is how Google handles dynamic serving and what role Googlebot (smartphone) might play in that now that crawling responsibilities have been moved from Googlebot-Mobile over to Googlebot.

To help gain some additional clarity on the impact of dynamic serving in SEO, not just for mobile SEO but search engine optimisation in general – I put together a series of tests. The tests weren’t meant to be exhaustive but aimed to cover off enough functionality to better understand the impacts and risks of using dynamic serving and what role Googlebot and Googlebot (smartphone) may play in it.

Algorithms Determine If Smartphone Crawling Is Needed

Initially the tests were deployed onto Convergent Media, which runs WordPress and uses a responsive web design template. It only took a couple of days for Googlebot to discover and begin crawling through the test setup. After waiting a week, there was still no sign of Googlebot (smartphone), which I thought was odd at the time. After more waiting, Googlebot (smartphone) still hadn’t crawled any of the test URLs.

I reached out to John Mueller to ask about the situation I was seeing unfold and he said:

We don’t crawl everything as smartphone, but when we recognize it makes sense, we’ll do that. For responsive design, the good thing is that we don’t need to crawl it with a smartphone — once crawl is enough to get all versions.

Now it makes sense why Googlebot (smartphone) wasn’t visiting the test setup: Convergent Media uses a responsive web design and Google’s algorithms had decided it wasn’t needed.

It is worth noting that despite Googlebot discovering the test URLs and those URLs sending signals such as HTTP Vary response headers, this wasn’t a strong enough signal to trigger Googlebot (smartphone) to visit the site. If more pages on the site had not used responsive web design, that might have caused Googlebot (smartphone) to visit, but it wasn’t happening as part of the test setup.
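Checking whether Googlebot (smartphone) has visited comes down to reading the user agent strings in the server logs. The following is a minimal sketch of how that check could be automated. The matching rules are my own heuristic based on the publicly documented user agent strings, not an official detection method, and the function names are hypothetical.

```python
# Heuristic classification of Google crawler user agent strings.
# Assumption: the smartphone crawler identifies itself with a mobile browser
# string that also contains the "Googlebot" token, while the older feature
# phone crawler uses the "Googlebot-Mobile" token.

def classify_google_crawler(user_agent):
    """Return a label for the Google crawler behind a user agent string."""
    ua = user_agent.lower()
    if "googlebot-mobile" in ua:
        return "googlebot-mobile (feature phone)"
    if "googlebot" in ua and "mobile" in ua:
        return "googlebot (smartphone)"
    if "googlebot" in ua:
        return "googlebot"
    return "other"


def count_crawler_hits(user_agents):
    """Count hits per crawler label for an iterable of user agent strings."""
    counts = {}
    for user_agent in user_agents:
        label = classify_google_crawler(user_agent)
        counts[label] = counts.get(label, 0) + 1
    return counts
```

User agent strings can be spoofed, so a count like this is only indicative. Verifying that a request really came from Google needs an additional check that is outside the scope of this sketch.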

Google have a vast amount of computing resource, but double crawling every URL on the web was obviously out of the question. John’s comment, “when we recognize it makes sense”, got me thinking about the traits that Google might be looking for in a website that might trigger Googlebot (smartphone) to begin crawling a site, such as:

- discover common ‘mobile website’ style links on the desktop website

- discover links to mobile app stores, suggesting website owner is switched on/aware of mobile specific user experiences

- discover rel="alternate" mobile tags on desktop

- discover HTTP Vary response header on desktop

- m/mobile subdomain verified in Google Webmaster Tools

- discover m/mobile subdomain XML sitemap referenced via desktop robots.txt file as a cross domain submission

- discover m/mobile subdomain

- crawl m/mobile subdomain taking note of key HTML elements like meta viewport

- crawl m/mobile subdomain taking note of common HTML/CSS/JavaScript frameworks in use, such as jQuery Mobile

- …

If you’re wondering why your site isn’t getting the attention you think it deserves moving forward from Googlebot (smartphone), it’d be worth considering some of the above points and others with respect to sending Google the right kind of signals that you’re in the mobile space.

Googlebot (smartphone) Mobile SEO Tests

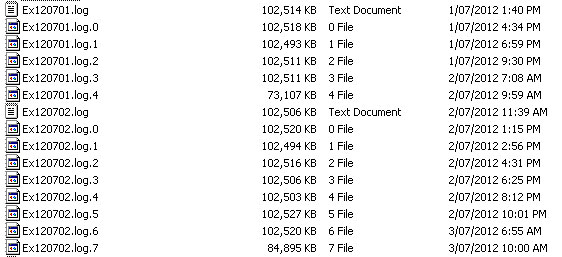

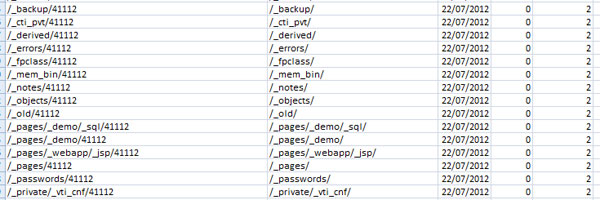

Not wanting to be dissuaded from completing the test, I reached out to Dan Petrovic of Dejan SEO. While the Dejan SEO website runs on WordPress, it doesn’t currently have a responsive web design implemented. I asked Dan if he could check for Googlebot (smartphone) activity and if he’d be willing to host my test files. The answer was yes on both counts!

Eight tests were implemented with a goal to determine:

- if there are crawling differences with/without the HTTP Vary response header

- if URLs served only to Googlebot (smartphone) are used for discovery

- if URLs crawled only by Googlebot (smartphone) are indexed

- if meta robots noindex tags served to Googlebot (smartphone) are actioned

- if rel="canonical" tags served to Googlebot (smartphone) are actioned

- if HTTP X-Robots-Tag noindex headers served to Googlebot (smartphone) are actioned

- if HTTP Link rel="canonical" response headers served to Googlebot (smartphone) are actioned

- if anchor text seen by only Googlebot (smartphone) has an impact on rankings

Test 1

Aim: Determine if there are crawling differences with/without the HTTP Vary response header.

Implementation:

Four files were created. Files 1 & 2 serve the same content to both Googlebot and Googlebot (smartphone), with file 2 adding a Vary header. Files 3 & 4 serve different content based on the user agent, with file 4 adding a Vary header.
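To make the four-file matrix concrete, here is a rough sketch of how such a setup could be expressed. It is not the code used in the test. The file names, HTML bodies and the `is_smartphone_crawler` heuristic are all assumptions made for illustration.

```python
# Sketch of the Test 1 matrix: four hypothetical files that differ only in
# whether content varies by user agent and whether a Vary header is sent.

DESKTOP_HTML = "<html><body><p>Desktop content</p></body></html>"
MOBILE_HTML = "<html><body><p>Mobile content</p></body></html>"

# Hypothetical file names; the real test URLs are not given in the article.
TEST_FILES = {
    "file-1": {"dynamic": False, "send_vary": False},
    "file-2": {"dynamic": False, "send_vary": True},
    "file-3": {"dynamic": True, "send_vary": False},
    "file-4": {"dynamic": True, "send_vary": True},
}


def is_smartphone_crawler(user_agent):
    """Assumed heuristic: a Googlebot token plus a mobile browser token."""
    ua = user_agent.lower()
    return "googlebot" in ua and "mobile" in ua


def build_response(file_name, user_agent):
    """Return (status, headers, body) for one of the four test files."""
    config = TEST_FILES[file_name]
    headers = {"Content-Type": "text/html; charset=utf-8"}
    if config["send_vary"]:
        # Signals that the response may differ depending on the user agent.
        headers["Vary"] = "User-Agent"
    serve_mobile = config["dynamic"] and is_smartphone_crawler(user_agent)
    body = MOBILE_HTML if serve_mobile else DESKTOP_HTML
    return 200, headers, body
```

Keeping the two switches (`dynamic` and `send_vary`) independent is what lets the test separate the effect of the Vary header from the effect of the content actually changing.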

Results:

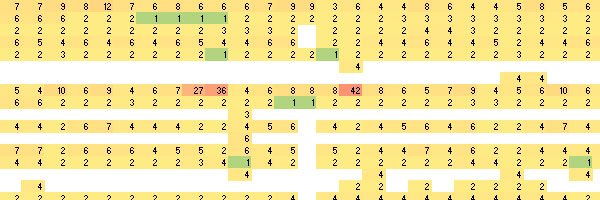

No measurable crawling differences were seen between the URLs. This could simply be because the sample size is very small, or because adding a HTTP Vary response header on its own isn’t a sufficiently strong signal to influence the crawl rate of Googlebot (smartphone).

Test 2

Aim: Determine if URLs served only to Googlebot (smartphone) are used for discovery.

Implementation:

Two test URLs were set up using dynamic serving, the latter of the two also using the HTTP Vary header. The mobile versions seen by Googlebot (smartphone) both link to unique URLs not seen by Googlebot. The unique URLs are available for both Googlebot and Googlebot (smartphone) to crawl.

Results:

Only the two desktop URLs were indexed. The dynamically served content on those URLs can’t be queried for in Google successfully. The unique URLs only seen by Googlebot (smartphone) have been crawled by Googlebot (smartphone) only, but have not been indexed.

Test 3

Aim: Determine if URLs crawled only by Googlebot (smartphone) are indexed.

Implementation:

Both tests use dynamic serving, the latter of the two also uses the HTTP Vary header. The mobile versions of both tests link to unique URLs not seen by Googlebot. The unique URLs are crawlable by Googlebot (smartphone), however Googlebot will receive a HTTP 403 (Forbidden) response code.
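The access rule for the unique URLs can be sketched as follows. This is an illustration of the idea, not the code used in the test. The crawler detection is an assumed user agent heuristic and the function names are hypothetical.

```python
from http import HTTPStatus


def crawler_type(user_agent):
    """Assumed heuristic for telling the two Google crawlers apart."""
    ua = user_agent.lower()
    if "googlebot" in ua and "mobile" in ua:
        return "googlebot (smartphone)"
    if "googlebot" in ua:
        return "googlebot"
    return "other"


def respond_to_unique_url(user_agent):
    """Return (status, body) for a unique URL linked from the mobile version.

    Desktop Googlebot is refused with 403, everything else gets the page.
    """
    if crawler_type(user_agent) == "googlebot":
        return HTTPStatus.FORBIDDEN, "Forbidden"
    return HTTPStatus.OK, "<html><body><p>Unique mobile-only page</p></body></html>"
```

Note the order of the checks in `crawler_type`: the smartphone user agent also contains the Googlebot token, so it has to be matched first or it would be refused as well.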

Results:

Only the two desktop URLs were indexed. Googlebot (smartphone) accessed the unique URL associated with file 1; however, like Test 2, it wasn’t indexed. Unfortunately, Googlebot did not access either of the unique mobile URLs to receive a HTTP 403 response code, though I suspect the outcome wouldn’t have changed.

Test 4

Aim: Determine if meta robots noindex tags served to Googlebot (smartphone) are actioned.

Implementation:

Both tests use dynamic serving, the latter also uses the HTTP Vary header. The mobile versions of both tests include a meta noindex tag that isn’t in the desktop HTML counterparts.
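Before drawing conclusions from a test like this, it helps to confirm that the mobile variant really carries the noindex directive and the desktop variant does not. Below is a small sketch of such a check using Python’s standard library HTML parser. The two HTML snippets are made-up stand-ins for the test pages, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser

# Made-up stand-ins for the two variants served from the same URL.
DESKTOP_HTML = "<html><head><title>Test 4</title></head><body>Desktop</body></html>"
MOBILE_HTML = (
    "<html><head><title>Test 4</title>"
    '<meta name="robots" content="noindex">'
    "</head><body>Mobile</body></html>"
)


class RobotsMetaParser(HTMLParser):
    """Collect the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() != "robots":
            return
        # The content attribute is a comma-separated list of directives.
        for token in (attributes.get("content") or "").split(","):
            if token.strip():
                self.directives.add(token.strip().lower())


def has_noindex(html):
    """Return True if the HTML contains a meta robots noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives
```

With the snippets above, `has_noindex(MOBILE_HTML)` is true and `has_noindex(DESKTOP_HTML)` is false, which is the asymmetry the test relies on.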

Results:

Both the desktop URLs are indexed. It appears the meta noindex tag served to Googlebot (smartphone) was ignored.

Test 5

Aim: Determine if rel="canonical" tags served to Googlebot (smartphone) are actioned.

Implementation:

Tests 1 & 2 have no rel="canonical" tag on the desktop content but do on the mobile version, and test 2 also has a Vary header. Tests 3 & 4 have different rel="canonical" tags on the desktop and mobile versions, and test 4 also includes a Vary header. Tests 5 & 6 return HTTP 403 (Forbidden) to Googlebot, while Googlebot (smartphone) can crawl the URLs, which both include a rel="canonical" tag; test 6 also has a Vary header.

Results:

The desktop URLs of tests 1 and 2 were indexed, ignoring the rel="canonical" tag served to Googlebot (smartphone). The desktop URLs of tests 3 and 4 were indexed, ignoring the rel="canonical" tag served to Googlebot (smartphone). Test URLs 5 and 6, which served HTTP 403 response codes to Googlebot, were not indexed and Googlebot (smartphone) didn’t crawl those URLs.

Test 6

Aim: Determine if HTTP X-Robots-Tag noindex headers served to Googlebot (smartphone) are actioned.

Implementation:

Tests 1 & 2 use dynamic serving and have HTTP X-Robots-Tag noindex response headers added to the mobile version with test 2 also including a HTTP Vary response header.
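As a rough illustration of the header setup described here, the sketch below builds the response headers for the two variants. The crawler check is an assumed user agent heuristic and the function names are hypothetical. Only the header names and values themselves (`X-Robots-Tag: noindex`, `Vary: User-Agent`) are standard.

```python
def is_smartphone_crawler(user_agent):
    """Assumed heuristic: a Googlebot token plus a mobile browser token."""
    ua = user_agent.lower()
    return "googlebot" in ua and "mobile" in ua


def response_headers(user_agent, send_vary):
    """Build headers where only the mobile variant carries a noindex header.

    send_vary=False corresponds to test 1, send_vary=True to test 2.
    """
    headers = {"Content-Type": "text/html; charset=utf-8"}
    if send_vary:
        headers["Vary"] = "User-Agent"
    if is_smartphone_crawler(user_agent):
        # Header equivalent of <meta name="robots" content="noindex">.
        headers["X-Robots-Tag"] = "noindex"
    return headers
```

Because the directive lives in the HTTP response rather than the HTML, the desktop and mobile bodies could even be identical and the test would still tell us whether the smartphone crawler’s view of the headers is acted on.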

Results:

Both desktop URLs were indexed, the HTTP X-Robots-Tag noindex response header was ignored when served to Googlebot (smartphone).

Test 7

Aim: Determine if HTTP Link rel="canonical" response headers served to Googlebot (smartphone) are actioned.

Implementation:

Tests 1 & 2 use dynamic serving and include a HTTP Link rel="canonical" response header in the mobile version of the page, with test 2 also including HTTP Vary response headers.
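For readers who haven’t used the header form of rel="canonical", the sketch below shows what the mobile response headers for a test like this might look like. The URL is a placeholder and the function names are hypothetical. The `<url>; rel="canonical"` value follows the standard HTTP Link header syntax.

```python
def canonical_link_header(canonical_url):
    """Format the value of an HTTP Link header declaring a canonical URL."""
    return '<{}>; rel="canonical"'.format(canonical_url)


def mobile_response_headers(canonical_url, send_vary):
    """Headers for the mobile variant; the desktop variant omits Link.

    send_vary=False corresponds to test 1, send_vary=True to test 2.
    """
    headers = {
        "Content-Type": "text/html; charset=utf-8",
        "Link": canonical_link_header(canonical_url),
    }
    if send_vary:
        headers["Vary"] = "User-Agent"
    return headers


# Placeholder URL, not one of the real test URLs.
example = mobile_response_headers("http://www.example.com/canonical-target", True)
```

Here `example["Link"]` holds the string `<http://www.example.com/canonical-target>; rel="canonical"`.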

Results:

The desktop URLs of both tests were indexed, ignoring the HTTP Link rel="canonical" response headers served to the mobile versions. Like Test 5 above that was testing rel="canonical" meta tags, the presence of the HTTP Link rel="canonical" response header did trigger Googlebot (smartphone) to visit the referenced canonical URL.

Test 8

Aim: Determine if anchor text seen by only Googlebot (smartphone) has an impact on rankings.

Implementation:

Tests 1 & 2 use dynamic serving, the latter also has HTTP Vary headers. The mobile versions of each test link to a URL not seen by Googlebot with anchor text unrelated to the content on the linked URL. The unique URL linked to by the mobile versions of the content is accessible to Googlebot (smartphone), however Googlebot will receive a HTTP 403 (Forbidden) error trying to crawl the unique URLs.

Results:

The desktop URLs for each test were indexed, but content served in the mobile-specific versions couldn’t be queried. Like earlier tests, the HTTP 403 response code served to Googlebot for the unique mobile URLs meant those URLs weren’t indexed.

Conclusion

Now that the tests have been completed, it is helpful to have a much better understanding of the role of Googlebot (smartphone) and its capabilities now that smartphone crawling has been moved over to Googlebot.

The key take away points from the above:

- Simply adding a HTTP Vary response header on its own didn’t appear to have an impact on crawl rate or the outcomes of any subsequent tests. However, it should be added when user agent detection is being used as it is a strong recommendation from Google and it represents best practice to help intermediate HTTP caches on the internet.

- Googlebot (smartphone) is being used for URL discovery, via in-content links, meta rel="canonical" tags and HTTP Link rel="canonical" response headers.

- Googlebot (smartphone) appears to ignore meta robots noindex, HTTP X-Robots-Tag noindex response headers, meta rel="canonical" and HTTP Link rel="canonical" directives. While an exhaustive list of all possible options was not tested, it is reasonable to assume that if common directives like meta noindex are ignored (something that Google will always honour via Googlebot), all other meta style directives will also be ignored.

- Googlebot (smartphone) does not appear to index unique content that is processed via dynamic serving. It does not appear as though it is possible to query Google and return that unique content via a desktop browser or mobile device.

- Googlebot (smartphone) appears to be used primarily for understanding website user experience (i.e., does website X provide a mobile experience) and optimising the user experience where possible (i.e., skipping redirects leading to m.domain.com, returning the correct URLs in search results via rel="alternate" meta tags).

- Despite the amazing growth of mobile, it appears that crawling, indexing and ranking is largely based upon data processed by Googlebot.


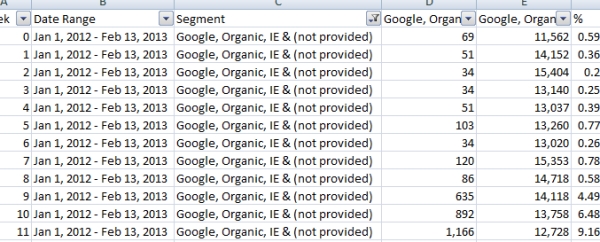

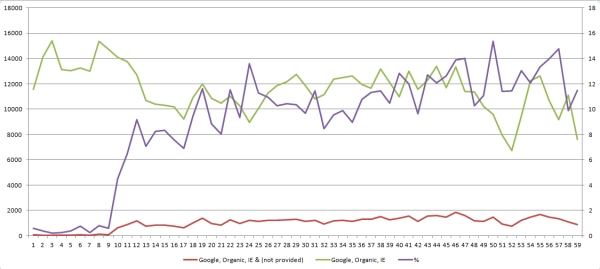

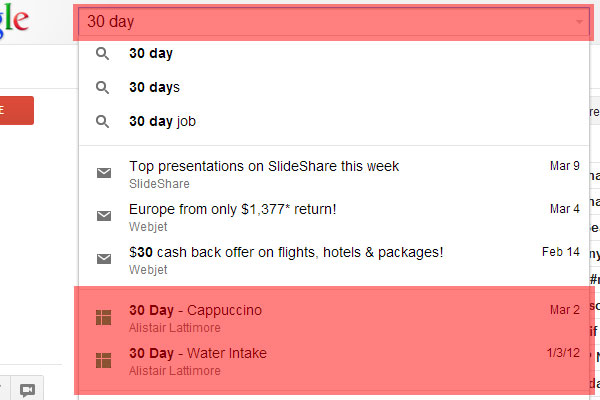

Measuring how fast Google Accounts are growing without being on the inside of Google is a little tricky. No one network provider or ISP can see all traffic on the internet, which means that anytime someone outside of Google makes a claim about the size of Google or any other business on the internet, it is a guess; educated maybe, but a guess nonetheless. Compounding that, if a user has a Google Account, there is a good chance that they’ll be logged into it, which means that the user’s internet traffic is encrypted between them and Google, making it a little harder again for network providers or third parties to provide an accurate figure.
