Recently Dan Petrovic published an excellent article titled The Great Link Paradox, which discusses the changing behaviour of website owners regarding how they link from one page to another, the role Google is playing in this change and Google’s fear mongering.

The key takeaway from the article is a call to action for Google:

At a risk of sounding like a broken record, I’m going to say it again, Google needs to abandon link-based penalties and gain enough confidence in its algorithms to simply ignore links they think are manipulative. The whole fear-based campaign they’re going for doesn’t really go well with the cute brand Google tries to maintain.

I’d like to talk about one phrase in the above quote, “simply ignore links they think are manipulative”, but before that, let’s take a step back.

Fighting Web Spam

As Google crawls and indexes the internet, ingesting over 20 billion URLs daily, their systems identify and automatically take action on what they consider to be spam. In the How Google Search Works micro-site, Google published an interesting page about their spam fighting capabilities. Set out in the article are the different types of spam that they detect and take action on:

- cloaking and/or sneaky redirects

- hacked sites

- hidden text and/or keyword stuffing

- parked domains

- pure spam

- spammy free hosts and dynamic DNS providers

- thin content with little or no added value

- unnatural links from a site

- unnatural links to a site

- user generated spam

In addition to the above, Google also use humans as part of their spam fighting tools through the use of manual website reviews. If a website receives a manual review by a Google employee and after review it is deemed to have violated their guidelines, a manual penalty can be applied to the site.

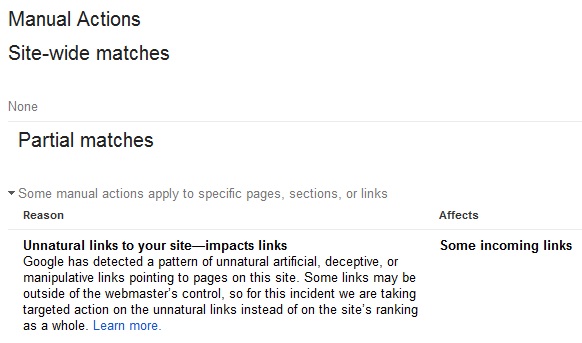

Manual penalties come in two forms: site-wide or partial. The former affects an entire website, so every page is subject to the penalty, while the latter might affect a sub-folder or an individual page within the website.

The actual impact of a penalty varies as well. Some penalties might see an individual page, an entire folder or an entire site drop in rankings. A penalty might affect only non-brand keywords or all keywords, or it might cause Google to ignore a subset of inbound links to a site. There is a litany of options at Google’s disposal.

Devaluing Links

Now that Google can detect unnatural links from and to a given website, the next part of the problem is being able to devalue those links.

Google has been able to devalue links since at least January 2005, when they announced the rel="nofollow" attribute in an attempt to curb comment spam.
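To make the attribute concrete, here is a minimal sketch of how a crawler might separate followed links from nofollowed ones in a blog comment. It uses only Python's standard `html.parser` module. The `LinkClassifier` class, the `classify_links` helper and the sample markup are hypothetical names made up for illustration, and this is not a description of how Google's crawler is implemented.

```python
from html.parser import HTMLParser


class LinkClassifier(HTMLParser):
    """Hypothetical helper: splits links by whether rel contains "nofollow"."""

    def __init__(self):
        super().__init__()
        self.followed = []
        self.nofollowed = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        # rel holds space-separated tokens; compare them case-insensitively.
        rel_tokens = (attributes.get("rel") or "").lower().split()
        if "nofollow" in rel_tokens:
            self.nofollowed.append(href)
        else:
            self.followed.append(href)


def classify_links(html):
    """Return (followed, nofollowed) lists of href values found in html."""
    parser = LinkClassifier()
    parser.feed(html)
    return parser.followed, parser.nofollowed


# Made-up comment markup: one ordinary link and one nofollowed link.
sample_comment = """
<p>Great post! See <a href="https://example.com/editorial">this write-up</a>
and <a href="https://example.org/pills" rel="nofollow">cheap pills</a>.</p>
"""

followed, nofollowed = classify_links(sample_comment)
print("followed:", followed)
print("nofollowed:", nofollowed)
```

Only the links in the `followed` list would be candidates for passing any value on to the page they point at.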

For the uninitiated, normally when Google discovers a link from one page to another, they will calculate how much PageRank or equity should flow through that specific link to the linked URL. If a link has a rel="nofollow" attribute applied, Google will completely ignore the link. As such, no PageRank or equity flows to the linked URL and the link does not impact organic search engine rankings.
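The flow of equity described above can be sketched with a textbook-style PageRank iteration. This is a simplified illustration under stated assumptions, not Google's production algorithm: the `pagerank` function, the damping factor of 0.85, the page names and the choice to drop nofollowed links from the graph entirely are all assumptions made for the example.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank over (source, target, nofollow) tuples.

    Assumption for this sketch: nofollowed links are removed from the
    graph, so they pass no equity at all.
    """
    pages = sorted({page for s, t, _ in links for page in (s, t)})
    outgoing = {page: [] for page in pages}
    for source, target, nofollow in links:
        if not nofollow:
            outgoing[source].append(target)

    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = outgoing[page]
            if targets:
                # Split this page's equity evenly across its followed links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Page with no followed links: spread its equity over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical four-page web: "c" links to "spam" with and without nofollow.
base_links = [("a", "b", False), ("b", "a", False), ("c", "a", False)]
rank_nofollow = pagerank(base_links + [("c", "spam", True)])
rank_followed = pagerank(base_links + [("c", "spam", False)])

print("spam rank with nofollow link:", round(rank_nofollow["spam"], 4))
print("spam rank with followed link:", round(rank_followed["spam"], 4))
```

In this toy graph the nofollowed link leaves the "spam" page with the same score as any other page that has no followed inbound links, while the followed version of the same link raises its score.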

In addition to algorithmically devaluing links explicitly marked with rel="nofollow", Google can devalue links via a manual action. For websites that have received a manual review, if Google doesn’t feel confident that the unnatural inbound links are deliberate or designed to manipulate the organic search results, they may devalue those inbound links without penalising the entire site.

Google Webmaster Tools: Manual Action – Partial Match For Unnatural Inbound Links

Why Aren’t Google Simply Ignoring Manipulative Links?

At this stage, it seems all of the ingredients exist:

- can detect unnatural links from a site

- can detect unnatural links to a site

- can devalue links algorithmically via rel="nofollow"

- can devalue links via a manual penalty

With all of this technological capability, why don’t Google simply ignore or reduce the effect of any links that they deem to be manipulative? Why go to the effort of orchestrating a scare campaign around the impact of good, bad or indifferent links? Why scare webmasters half to death about linking out to relevant third-party websites, such that their readers are disadvantaged because relevant links aren’t forthcoming?

The two obvious reasons that immediately come to mind:

- Google can’t identify unnatural links with enough accuracy

- Google doesn’t want to

Point 1 above doesn’t seem a likely candidate. The Google Penguin algorithm, which rolled out in April 2012, was designed to target link profiles that were low quality, irrelevant or had over-optimised link anchor text. If they are prepared to penalise a website via Google Penguin, it seems reasonable to assume that they have confidence in identifying unnatural links and taking action on them.

Point 2 remains the likely candidate at this stage. Google simply don’t want to flatly ignore all links that they determine are unnatural, whether those links arise by accident from poorly configured advertising, or from black hat link building tactics, over-enthusiastic link building strategies or simply bad judgement.

What would happen if Google did simply ignore manipulative inbound links? Google would only count links that their algorithms determined were editorially earned. Search quality wouldn’t change. Google Penguin is designed to clean house periodically through algorithmic penalties, and if Google simply ignored the very same links that Penguin targets, those websites wouldn’t have risen to the top of the rankings only to get cut down by Penguin at a later date.

Google organic search results are meant to put forward the most relevant, best websites to meet a user’s query. Automatically ignoring manipulative links doesn’t change the search result quality, but it also doesn’t provide any deterrent for spammers. With irrelevant links simply being ignored, a spammer is free to push their spam efforts into overdrive without consequence, and Google wants there to be a consequence for deliberately violating their guidelines.

How effective was the 2005 addition of rel="nofollow" at fighting comment spam by removing the reward of improved search rankings? It had no impact. Spam levels recorded by Akismet, the free spam detection service by Automattic, haven’t eased since it launched. In fact, comment spam levels are growing at an alarming rate despite the fact that most WordPress blogs have rel="nofollow" comment links. The parallels between removing the reward for comment spam and removing the reward for spammy links are striking, and it didn’t work last time, so why would Google expect it to work this time?

Why aren’t Google ignoring unnatural links automatically?

Google doesn’t like being manipulated, period.

Agree.

Machine intelligence will never achieve human levels.

Don’t agree.

Google are interested in profits and spam generates profits.

Thanks for stopping by and leaving a comment Panos.

Machine intelligence might not reach human levels for a while. However, when you’re analysing hundreds of millions of websites, billions of URLs and trillions of links, patterns begin to emerge that a human couldn’t spot, and I think that is where the power of Google’s systems lies in general.

I don’t believe that Google are holding back from fighting spammers because spam is making them money. There are plenty of examples in the past where Google have made decisions that had negative impacts on their profits but forged ahead with them regardless, such as automatically removing pure spam, or banning advertisers for deceptive advertising practices or participating in arbitrage.